Workers can unlock the artificial intelligence revolution

Employers and artificial intelligence developers should ensure new technologies work for workers by making them trustworthy, easy to use and valuable

Artificial intelligence (AI) has the potential to boost firm-level labour productivity by three to four per cent, and could therefore have a significant impact on economic growth in Europe. However, only four in ten European businesses have so far adopted an AI technology, most commonly in areas such as fraud detection or warehouse management.

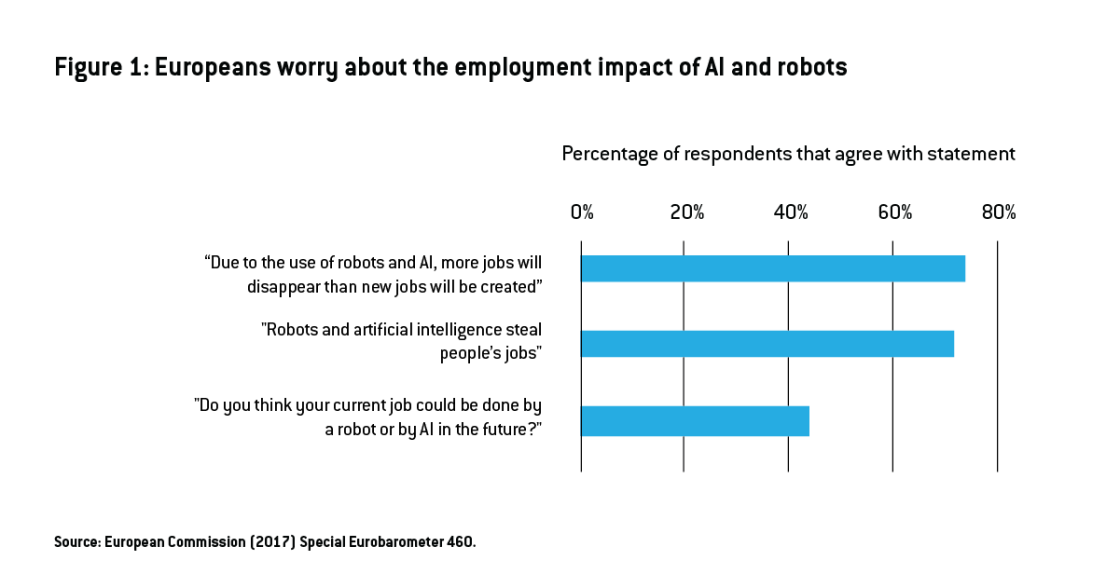

One reason AI take-up in European firms might be slower than it could be is that workers hesitate to accept AI and other smart technologies at work. Simply providing a new technology does not guarantee that workers will adopt it, and the underutilisation of technology by employees is considered a crucial factor in explaining the ‘productivity paradox’: the stagnation of productivity despite a large increase in technology uptake. The percentage of Europeans comfortable with having a robot assist them at work fell from 47% in 2014 to 35% in 2017, a decline that seems partly driven by employment concerns: 74% of Europeans expect that AI will destroy more jobs than it creates, and 44% of workers think their current job could at least partly be done by a robot or AI (Figure 1). This worry is greatest among low-skilled manual workers and white-collar workers, consistent with research showing that people in professions at risk of automation are more fearful about the future.

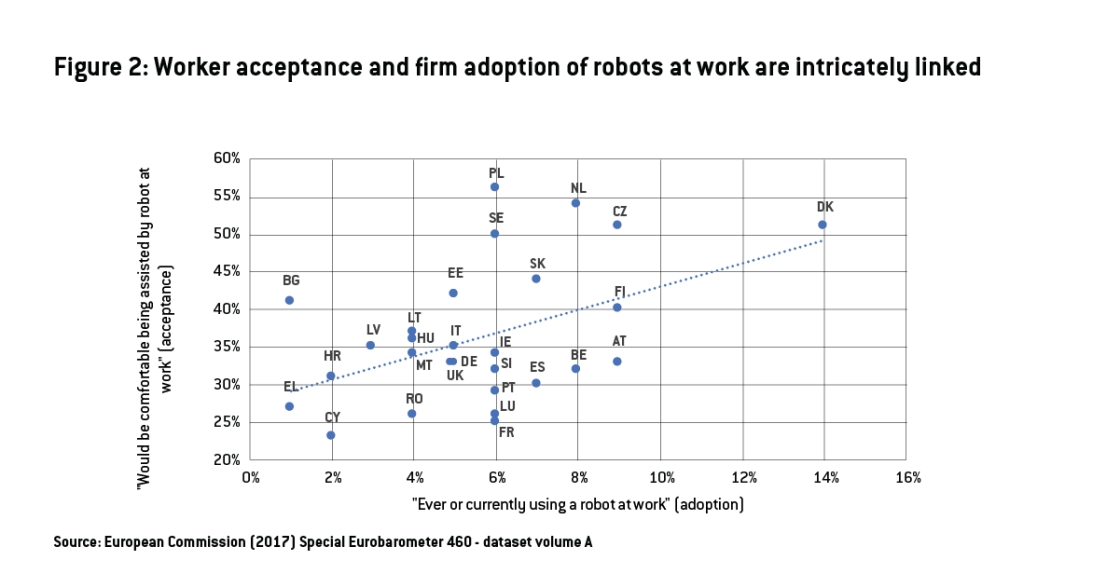

European data linking firm-level AI adoption and worker-level AI acceptance is scarce, but Eurobarometer provides some evidence about worker acceptance and adoption of new technologies at work. Workers in countries with greater acceptance of robots at work also report greater exposure to the adoption of workplace robots (Figure 2). Causality probably runs both ways, since exposure to robots also increases acceptance through learning effects over time. Still, these data indicate that worker acceptance and firm adoption of new technologies are closely linked, with a correlation of 0.47.

To make the most of AI, both employers and workers need to be able to see its potential. The literature on technology acceptance in the workplace can guide policymakers and businesses in helping workers accept AI and other smart technologies at work.

Accepting technology at work: what do I have to gain and how much will it cost me?

A worker’s decision on whether and how to use a new technology depends on two factors: the technology’s perceived usefulness and its perceived ease of use. Perceived usefulness is defined as “the degree to which a person believes that using a particular system would enhance his or her job performance” and perceived ease of use is defined as “the degree to which a person believes that using a particular system would be free from effort”. If a worker believes the technology offers a lot for little effort, they will be more inclined to use it. Early studies showed that usefulness is a stronger predictor of uptake than ease of use: “Users are often willing to cope with some difficulty of use in a system that provides critically needed functionality”. Conversely, no amount of ease of use can compensate for a useless system.

Workers judge the first factor, perceived usefulness, by comparing the system’s capabilities with their duties in the workplace. A worker values a technology more when it is relevant to their tasks, produces high-quality output and delivers results that can be easily demonstrated. Social processes also influence perceived usefulness. If the technology appears to enhance one’s status, or if co-workers or supervisors expect a person to use it, the worker’s perception of the system’s usefulness will increase.

The second factor, perceived ease of use, depends on the worker’s preconceived beliefs about a technology and on how those beliefs adjust over time as the worker gains direct experience with the system. A worker’s initial beliefs are anchored in their computer literacy, the organisational support resources available, their intrinsic motivation to use computers and any computer anxiety they may have. Once the worker experiences the actual technology, they adjust these beliefs based on how enjoyable the technology is to use (aside from any performance consequences) and on its objective usability (the actual, rather than perceived, effort required to complete specific tasks).

The importance of organisational support for technology uptake increases over time, as more of the available support infrastructure gets used and assistance with concrete, on-the-job use becomes more relevant. Gender, age, experience and willingness to use moderate the effects of these factors.

Accepting robots and AI in the workplace

Algorithms in the workplace are not new. They have been used for optimisation (such as scheduling and inventory management) and prediction (such as demand forecasting, credit-risk analysis and personalised marketing) for several decades. The main differences between these traditional algorithms and current AI applications at work lie in who interacts with the algorithm, and how and when.

In the past, algorithms were mostly handled by statisticians or computer engineers in back-office departments. The outputs of algorithms had an impact on front-line workers (for example, by determining their monthly schedule), but those workers were usually unaware of the system’s existence. Now, many AI applications involve front-line workers interacting daily with algorithms on computers, smartphones and wearable devices. In the past, algorithms also acted over longer periods: scheduling and forecasting were done in advance and gave workers some stability. Now, fast and accessible algorithms can make minute-to-minute adjustments and react to the workers’ environment in real time (for example, Uber’s surge-pricing algorithm).

Research on the acceptance of robots and AI is still in its infancy and is characterised by small-sample studies in which workers’ perspectives are underrepresented. A few critical factors emerge nonetheless. For one, workers have been found to avoid using AI systems that are burdensome, for example because they increase workloads or send excessive numbers of recommendations and alerts.

Similarly, the opacity of algorithms can be a barrier to adoption by workers. In the healthcare sector, not understanding why an AI’s recommendation differs from one’s own assessment prevents workers from integrating the system into their daily routines. Being unable to judge whether the system’s decision is correct leads to mistrust and reduces its perceived usefulness. Unclear accountability for medical decisions makes workers uncomfortable about relying on a system with little transparency. This accountability issue is just as important when workers themselves are data sources, for example under algorithmic management. In this case, the scepticism caused by the system’s lack of transparency is reinforced by privacy concerns and doubts about data security, which can make workers less willing to adopt the technology.

When robots are involved, additional concerns about physical wellbeing arise. In workspaces with active human-robot collaboration, as in manufacturing, even minor robot malfunctions can lead to severe human injuries. Therefore, safety considerations are crucial determinants of industrial workers’ attitudes towards robotics in their workplace.

Concerns about individual job security and broader adverse labour market effects are important factors in people’s attitudes towards robots at work. At the same time, workers base their attitudes on the robots’ impact on their day-to-day jobs. For example, human-robot interaction may replace human-human interaction, reducing communication and collaboration between co-workers. Or workers may need to reallocate time from job-specific tasks to monitoring the robot, leading to deskilling and the erosion of operational knowledge.

How to increase technology acceptance

Employers can ensure technologies work for workers, not against them, by making them easier to use and making them more useful in employees’ day-to-day work. Interventions to increase user acceptance can occur before and after implementing a new technology.

Pre-implementation interventions focus on enabling accurate perceptions of usefulness and ease of use by providing a realistic preview of the system. This involves identifying and communicating specific use cases, such as operational problems or business opportunities that can be addressed by AI. It also includes explaining the specific choice of technology with a transparent assessment of its benefits over other technical solutions. Enhancing the interpretability of models, for example by visualising which data influences the model’s output, can reduce user uncertainty and increase trust. In addition to management buy-in and high software engineering standards, two further factors increase technology acceptance pre-implementation: user participation and incentive alignment.

User participation, or involvement in the design, development and implementation of the system, helps users form judgments about the system’s eventual relevance to their jobs, its output quality and the demonstrability of its results. This can be achieved through hands-on activities including system evaluation and customisation, prototype testing and business process change initiatives. In the specific case of AI, workers from different backgrounds could be involved in establishing anti-discrimination protocols that guard against bias in the system.

Incentive alignment ensures that the effective use of the system, as envisioned by the employer, aligns with workers’ own interests and incentives. These incentives extend beyond monetary rewards to the fit between a technology and a worker’s job requirements and value system. For example, if using the technology does not benefit a worker or her direct co-workers but instead benefits members of other work units, the user will perceive a lack of incentive alignment that may lead to low use of the system.

Post-implementation interventions, in turn, focus on supporting the transition and adaptation to the new system. Training is the most critical intervention for greater user acceptance and system success. To reduce the perceived opacity of AI systems, organisations should invest in the data literacy of their workers. Firms can further support workers by providing the necessary infrastructure for using the technology, creating dedicated helpdesks and providing business process experts. Finally, workers’ peers can provide support as well: they can assist with formal or informal training and can help with direct modification of the system or work processes.

Employers can step in to ease workers’ worries about AI’s specific employment effects. Replacing workers is not the main objective of most AI-adopting businesses, and employers can put more emphasis on augmenting workers’ value through enhanced insights or learning. When job losses are unavoidable, employers can invest in retraining their workers for other opportunities within the firm.

Policy interventions to address concerns over job losses can include safety nets such as reskilling and transition programmes, individual learning accounts, unemployment benefits and universal basic income. To increase workers’ trust in AI systems, both the regulation of AI itself and efforts to improve workers’ data literacy are essential to make sure workers are ready to accept and adopt AI, allowing it to reach its full economic potential.

Recommended citation:

Hoffmann, M. and L. Nurski ‘Workers can unlock the artificial intelligence revolution’, Bruegel Blog, 30 June 2021

This blog was produced within the project “Future of Work and Inclusive Growth in Europe”, with the financial support of the Mastercard Center for Inclusive Growth.